[Tag search]

Thursday 9 February 2023, 00:00

Sam Kriss has a Substack posting in which he describes the

zairja of the world and then links it to his ideas on why AI is

getting worse. Basically, what I get from the piece is that he's

saying successive generations of GPT models have produced less and less

valuable output as they better and better approximate the average

content of the World Wide Web. Nearly all of the Web is garbage, and

so an approximation of the average Web document is garbage too. The

output from older models is weird and interesting because more random

and less accurately approximating the average Web document; the output

from new models is boring and worthless. My own view is that these

issues are not as serious or as inevitable as he presents them. There

seem to be some gaps in Kriss's understanding of how language models,

and the ChatGPT Web service in particular, work; and filling in the

gaps points to some natural solutions for the problem he describes.

Sunday 25 September 2022, 16:13

There are a number of directions from which we can look at current

developments in deep neural networks and the issues I raised in my streamed

comments on pirate AI.

Here's a summary of the implications I see from the perspective of the Unix

philosophy.

Sunday 11 September 2022, 00:00

I've been thinking a lot recently about the current developments in

deep neural network generative models, and how they implicate free

software issues. I went into a lot of this stuff in my July 25 Twitch

stream, and although I'd also like to write up some of my thoughts in a more

organized way, the transcript of the relevant video content is a pretty

decent introduction in itself, so I thought I'd post it here.

This is an automatically generated transcript (by another of these

models!), with a fair bit of manual editing - so it's spoken English written

down, not the usual standard form of written English. The video itself will

go online eventually, but it may be a long time before that happens, because

my video archive server is at capacity now and I probably won't expand it

until the Twitch stream gets enough viewers I can sell some subscriptions to

pay the bills.

Sunday 31 July 2022, 17:00

This is the output from one of my text-generation AI experiments. I

started with the GPT-J 6B model, and fine-tuned it on about 200,000 words of

my own fiction writing (about half of that being the text of Shining Path). It took about 36

hours to fine-tune the model on my 12-core desktop PC, notably not

using GPU computation, and then maybe 12 hours or so to generate

this text under human supervision.

Saturday 25 March 2017, 12:30

I've posted

some notes on the computer-science aspects of

reporting rounded numbers in financial statements and hoping for the totals

to add up correctly. You've doubtless seen footnotes on things that say

"Totals may not add due to rounding." Can that be avoided? Here's a

detailed examination of the question.

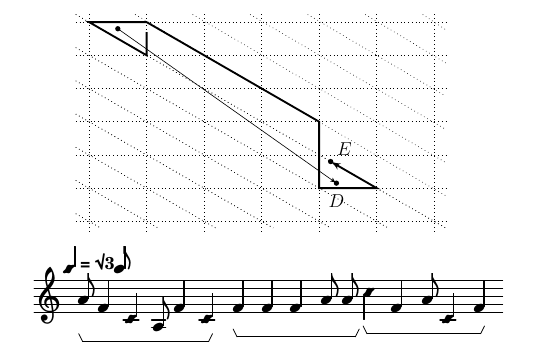

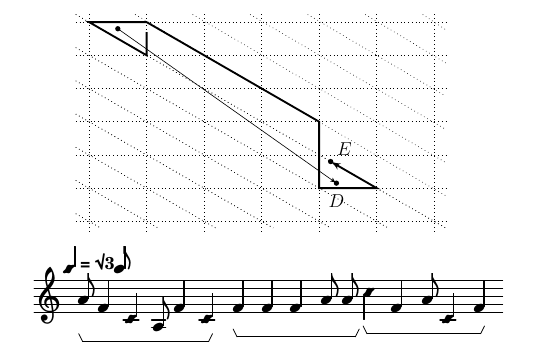

Sunday 2 August 2015, 04:09

I have posted a

detailed set of notes (PDF file)

describing the theory behind my Black Swan Suite, detailing

the endless chase of Elmer and Daffy across Penrose, pinwheel, and other

nonperiodic tilings of the plane. Fans of

music and computational geometry may find the document interesting. At the

very least, it was fun to typeset.

Thursday 30 October 2014, 12:16

In January of 2011, I had recently arrived at the University of Manitoba

to work as a postdoc with Stephane Durocher. One of the first things he

asked me to do was find out how many cycles there are in an n-dimensional

hypercube graph, for general n. At the time, he and I both assumed that

that meant spending maybe half an hour in the library looking up the

answer.

Since then it's been more than three years; thousands of lines of

computer code and months of CPU time; we added two more co-authors; we

didn't solve the original problem, and didn't completely solve the

other problem that we ended up working on, either; but here's a journal

paper, anyway, and I think it's pretty interesting. The official definitive

version will be free to access until mid-December and then will go

behind Elsevier's paywall; the authors' accepted manuscript on

arXiv is permanently accessible.

Read the paper if you're interested in the math details; in this entry

I'm going to try to tell the story behind the paper, in relatively

non-mathematical terms. I'm hoping it'll be of interest to my Web log

readers and give you some idea, because I often get asked this, of what I

actually do at work.

Monday 10 February 2014, 08:39

The text below pretty much speaks for itself. Bold highlighting and

numbered footnotes in [square brackets] are mine; all the rest is as I

received it. Some irregularities of spacing and punctuation, visible in the

original email, aren't obvious in the HTML. Names of the students are

redacted because (after finding several more copies on the Web) I imagine

the students are relatively innocent victims of bad advice. Name of the

institution not redacted because I hope others who receive such letters and

look for them on the Web will be able to easily find this posting.

Wednesday 21 August 2013, 12:03

I just got back from a trip to multiple conferences in Ontario, and that

makes it a good time to update my

publications page. Most

people interested in my academic work are likely to find out about it from

other sources, but I'm going to post some brief and relatively non-technical

introductions here as well for my general Web site readers. The official

versions of these papers are mostly behind paywalls, but there are also

unofficial "preprint" versions available to all.

Wednesday 30 January 2013, 18:44

Here are the

slides (PDF) and an audio recording (MP3, 25 megabytes,

54 minutes) from a talk I gave today about one of my research projects.

You'll get more out of it if you have some computer science background, but

I hope it'll also be accessible and interesting to those of my readers who don't.

I managed to work in Curious George, Sesame Street, electronics, XKCD, the

meaning of "truth," and a piece of software called ECCHI.

I plan to distribute the "Enhanced Cycle Counter and

Hamiltonian Integrator" publicly at some point in the future. Maybe not

until after the rewrite, though.

Abstract for the talk:

It is a #P-complete problem to find the number of subgraphs

of a given labelled graph that are cycles. Practical work on this

problem splits into two streams: there are applications for counting

cycles in large numbers of small graphs (for instance, all 12.3

million graphs with up to ten vertices) and software to serve that

need; and there are applications for counting the cycles in just a few

large graphs (for instance, hypercubes). Existing automated techniques

work very well on small graphs. In this talk I review my own and

others' work on large graphs, where the existing results have until

now required a large amount of human participation, and I discuss an

automated system for solving the problem in large graphs.

Friday 10 August 2012, 21:00

Here's a photo gallery (salvaged from my old gallery software in July

2020) of my trip to the Canadian Conference on Computational Geometry in

Charlottetown, PEI.

Friday 6 July 2012, 21:00

Here's a photo gallery (salvaged from my old gallery software in July

2020) of my trip to the Canadian Conference on Computational Geometry in

Helsinki.

Monday 5 December 2011, 14:56

I encountered an interesting problem on the Tsukurimashou project recently, and some inquiries with friends confirmed my suspicion that if anyone has solved it, they've done it in a language-specific way for ridiculous languages. It appears I have to solve it again myself. Here are some notes.

Wednesday 17 August 2011, 15:02

Now online: photo gallery, PDF presentation slides, and MP3 audio recording of my talk from my recent Toronto trip.

Wednesday 31 December 1969, 16:00

Anyone who's studied computer science should be familiar with the Landau

asymptotic notation, or "big-oh" notation. But how well do you really know

it?

Given two functions it seems intuitive that one at least one ought to be

big-oh of the other; in some cases maybe they are both big-oh of each other,

in which case they are theta of each other and in a certain sense can be

said to be equivalent. It sure feels like a total order, with a place for

everything and everything in its place, and it is a total order on all the

kinds of functions we normally care about. I've certainly marked down

plenty of students for incorrectly claiming that

n log n can't be compared either way to things like

n and n2.

But today I had occasion to wonder if it's really true that O() induces a total order on all functions for which it is applicable. I looked on the Net and couldn't find anyone discussing this issue; I did find this archived final exam in which someone asked it of students as a true/false question and apparently was looking for the answer "true." Unfortunately, the correct answer is "false."

Counterexample below the cut. If you've got a computer science background you might want to think about it a bit yourself before going further. It shouldn't really be hard if you look carefully at the definition.

Thursday 9 December 2010, 20:57

It's not like I don't have enough projects to work on already. Nonetheless, I had an idea I thought was pretty cool, and I'm going to at least describe it here, whether I end up implementing it or not.

Okay, so: kerning. If you're setting type, you need to know where to put each glyph in relation to the previous one. In the old old days, it was easy because each glyph came on a little metal block and you'd just set them right next to each other and clamp them in place. But a computer (and, earlier, a phototypesetting machine) has the opportunity to make a decision. And if you just have a fixed bounding box for each glyph and set them side by side, you run into problems when you have situations like a capital A next to a capital V. "AV". Using the bounding boxes that would be correct for those letters in other contexts, you end up putting too much space between them. You need to squeeze them together so that part of the V projects into the space that the A, if it were next to something else, would reserve for itself. This squeezing together is called "kerning."

Tuesday 26 October 2010, 22:33

I wrote before about the writing style analysis toy; at that time I said the "blogosphere" wasn't ready for such technology, and I still believe that, but I recently did something sort of related that might interest you, and the stakes are a little lower, so I'm going to share it here.

The thing is, in my novel draft, there are 45 chapters, and some of them are deliberately written in different styles from others. I thought it'd be interesting to see if I could detect that statistically. I apologize for not posting the actual text here - you'll have to wait until the book is published - but I'll at least give you the raw numbers to play with and walk you through the analysis.

Monday 19 July 2010, 19:26

Hi! I'm a scientific researcher. I have a PhD in computer science. My doctoral dissertation is mostly about the mathematical background of "similarity search." That means looking at things to find other things they are similar to. I've travelled the world to present my work on similarity search at scientific conferences - and some very smart people with very limited funds chose to use those funds to pay for me to do that.

Argument from authority has its limitations, but I would like to make very clear: I am an expert in the specific area of how computers can answer questions of the form "Which thing does this thing most resemble?" Gee, why would I mention this right now?